Why did ACT suddenly reverse course and ditch the 1-36 score for ACT Writing?

ACT announced on June 28th, 2016 that, as of the September 2016 test date, ACT Writing scores will change once more. One of the most critical purposes of a test scale is to communicate information to the score user. In that regard, the 1-36 experiment with Writing was a failure. ACT has admitted that the scale “caused confusion” and created a “perceptual problem.” It is not yet clear whether the new change, piled onto the class of 2017’s already large load of changes, will lessen the criticism that ACT has been receiving.

Is the test changing?

The essay task is not changing, and two readers will still be assigning 1-6 scores in four domains. ACT states, “Some language in the directions to the students has been modified to improve clarity.” It has not yet clarified what the clarification will be.

How will scores be reported going forward?

The basic scoring of the essay will remain unchanged, but the reporting is being overhauled. Two readers score each essay from 1-6 in four domains — Ideas & Analysis, Development & Support, Organization, and Language Use & Conventions. A student can receive a total of 8 to 48 points from the readers. On the test administrations from September 2015 to June 2016 this “raw score” was converted to a 1-36 scale to match the scaling process used in the primary ACT subject tests. The mean, distribution, and reliability, however, were fundamentally different for Writing than for English, Math, Reading, and Science. ACT should not have used the same scale for scores that behaved so differently.

The proposed change is to go back to a 2-12 score range used prior to September 2015. In yet another confusing twist, though, the 2-12 score range for 2016-2017 is very different than the one used in 2014-2015. Whereas the old ACT essay score was simply the sum of two readers’ holistic grades, the new 2-12 score range is defined as the “average domain score.” The average is rounded to the nearest integer, with scores of .5 being rounded up. For example, a student who receives scores of {4, 4, 4, 5} from Reader 1 and {4, 4, 5, 4} from Reader 2 would receive domain scores of {8, 8, 9, 9}. The student’s overall Writing score would be reported as a 9 (34/4 = 8.5).
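The arithmetic behind the new 2-12 score can be sketched in a few lines of Python. This is only an illustration of the rule as described above, not ACT's actual implementation; the function name `average_domain_score` is our own. Note that Python's built-in `round()` rounds halves to the nearest even number, so the sketch rounds .5 up explicitly.

```python
from math import floor

def average_domain_score(reader1, reader2):
    """Return (domain_scores, reported_score) from two readers' 1-6 ratings.

    Each reader supplies four ratings, one per domain, in the same order.
    """
    if len(reader1) != 4 or len(reader2) != 4:
        raise ValueError("each reader must supply four domain scores")
    # Each domain score is the sum of the two readers' ratings (2-12).
    domain_scores = [a + b for a, b in zip(reader1, reader2)]
    mean = sum(domain_scores) / 4
    # Round to the nearest integer, with .5 rounded up.
    reported = floor(mean + 0.5)
    return domain_scores, reported

domains, score = average_domain_score([4, 4, 4, 5], [4, 4, 5, 4])
print(domains, score)  # [8, 8, 9, 9] 9
```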

Will the ELA score change?

In September 2015 ACT began reporting an ELA score that was the rounded average of English, Reading, and Writing scores. They also began reporting a STEM score that was the rounded average of Math and Science. Now that the Writing score is no longer on the 1-36 scale, it would seem that the ELA scoring would need to change. Except that ACT doesn’t want it to change. In effect, they are preserving the 1-36 scaling of Writing buried within the ELA calculation. “If you can’t see it, you can’t be confused” seems to be the message from ACT. Needless to say, we feel that the confusion exists on the other end of the line.
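As a rough sketch, the ELA and STEM calculations are just rounded averages of 1-36 scores. The input scores below are illustrative, and the rounding rule (halves rounded up) is our assumption; ACT's exact rule for these averages is not stated here.

```python
from math import floor

def rounded_average(*scores):
    """Average a set of 1-36 scores and round to the nearest integer.

    Assumption: halves round up. The rule ACT actually applies to ELA and
    STEM is not given in this article.
    """
    return floor(sum(scores) / len(scores) + 0.5)

# English, Reading, and the implicit 1-36 Writing score (illustrative values).
ela = rounded_average(35, 36, 31)
# Math and Science (illustrative values).
stem = rounded_average(33, 34)
print(ela, stem)  # 34 34 (the STEM result depends on the round-half-up assumption)
```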

What does this mean regarding my plans to re-test in September? Will my February scores be converted into the new 2-12 scores?

In general, students should not be making re-testing plans based solely around ACT Writing scores. If you were planning on repeating the ACT in the fall, the score reporting change should not change your mind. If you are satisfied with your scores, you should tune out any hubbub surrounding the new reporting.

ACT has produced example student, high school, and college score reports corresponding to the September 2016 updates (some subscore categorizations are also changing!). ACT will continue to report scores by individual test date (College Board, in contrast, will report all of a student’s scores with each report). A student’s old 1-36 scores will not be changed.

If this is just a reporting change, how could it impact my ranking in any way?

This is where things start to get really confusing. There are any number of intersecting issues: percentiles (new, old, 1-36, 2-12, ELA), concorded scores, the scaling of individual test dates, and rounding artifacts.

What sort of problems can occur with averaging and rounding domain scores?

With the 1-36 scale, there were obviously 36 potential scores (although not all test forms produced all scores). On the 2-12 reporting, only 11 scores are possible. The tight range of scores typically assigned by readers and the unpredictability of those readers mean that reader agreement is the exception rather than the norm. Even small grading differences can create large swings. For example, a student with scores of {4, 4, 3, 4} and {4, 4, 3, 3} would have a total of 29 points, or an average domain score of 7 (7.25 rounded down). Had the student received even a single additional point from a single reader on a single domain, her scores would have added to 30 points and averaged 8 (7.5 rounded up). This seemingly inconsequential difference in reader scoring is the difference between the 84th percentile and the 59th percentile. And her readers were in close agreement!

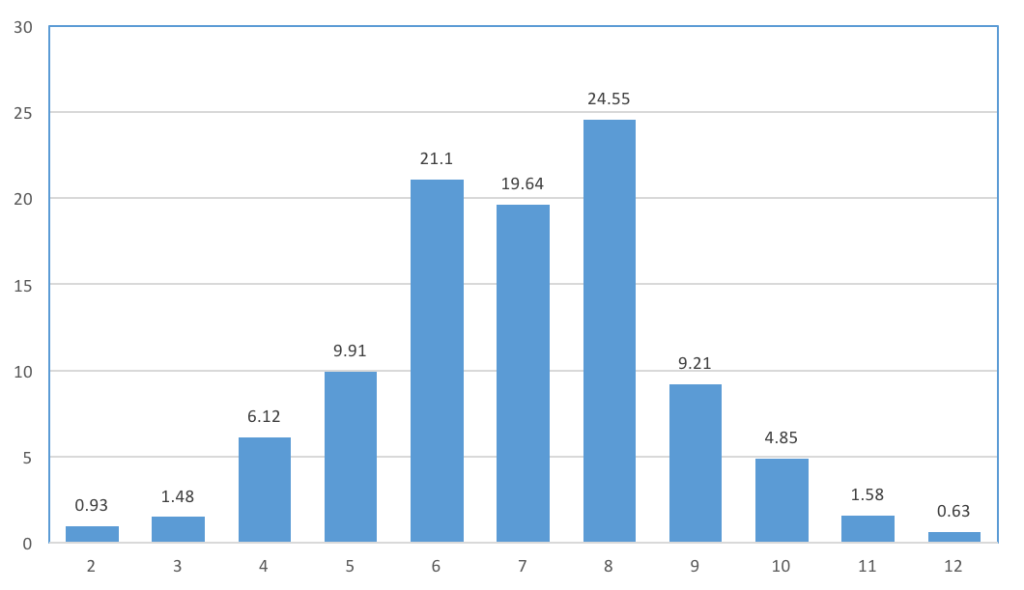

ACT Writing scores are clustered around the mid-range. Readers gravitate toward giving 3s, 4s, and 5s. According to ACT’s percentile data for the 2-12 reporting (see below), 65% of students’ 2-12 scores will be 6s, 7s, or 8s.

| Average Domain Score | Cumulative Percent |
|---|---|
| 2 | 1 |
| 3 | 2 |
| 4 | 9 |
| 5 | 18 |
| 6 | 40 |
| 7 | 59 |
| 8 | 84 |
| 9 | 93 |
| 10 | 98 |
| 11 | 99 |
| 12 | 100 |

Visually, you can see how compact the range is as well. Fewer than 5% of test-takers receive a 2, 3, 11, or 12.
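The clustering can be checked directly from the cumulative percents in the table. The sketch below is ours, not ACT's, and it uses the whole-number values shown above, so the middle-band result (66%) lands a point away from the 65% cited earlier, presumably because of rounding in the published table.

```python
# Cumulative percent at each 2-12 score, copied from the table above.
CUMULATIVE = {2: 1, 3: 2, 4: 9, 5: 18, 6: 40, 7: 59,
              8: 84, 9: 93, 10: 98, 11: 99, 12: 100}

def share_by_score(cumulative):
    """Convert cumulative percents into the percent of students at each score."""
    shares = {}
    previous = 0
    for score in sorted(cumulative):
        shares[score] = cumulative[score] - previous
        previous = cumulative[score]
    return shares

shares = share_by_score(CUMULATIVE)
middle = sum(shares[s] for s in (6, 7, 8))       # students scoring 6, 7, or 8
tails = sum(shares[s] for s in (2, 3, 11, 12))   # two lowest and two highest scores
print(middle, tails)  # 66 4
```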

Isn’t it a good thing that the essay is reported in gross terms rather than pretending to be overly precise?

Yes and no. The essay is a less reliable instrument than the other ACT tests and far less reliable than the Composite score. A criticism of the 1-36 scale for Writing was that it pretended to a level of accuracy that it could not deliver; it is, after all, only a single question. What the change cannot do, however, is remake the underlying fundamentals of the test. The bouncing around of scoring systems has led ACT to encourage the use of percentiles in gauging performance.

Percentiles cannot improve the reliability of a test. Percentiles cannot improve the validity of a test. Percentiles — like scaled scores — can easily provide a false sense of precision and ranking. To understand how this would work, take the most extreme example — completely random scores from 2 to 12. Even though there would be no value behind those scores, someone receiving a score report would still see a 7 as the 55th percentile and a 9 as the 73rd percentile. The student with the 9 clearly did better, right? Except that we know the scores were just throws at the dartboard. The percentile difference seems meaningful, but it is just noise.
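The dartboard figures are easy to reproduce. In this minimal sketch (our own illustration), every one of the 11 possible scores is equally likely, and the "percentile" is simply the share of test-takers at or below a given score.

```python
SCORES = range(2, 13)  # the 11 possible reported scores, 2 through 12

def uniform_cumulative_percent(score):
    """Percent of test-takers at or below `score` if all scores were equally likely."""
    at_or_below = score - SCORES.start + 1
    return round(100 * at_or_below / len(SCORES))

print(uniform_cumulative_percent(7))  # 55
print(uniform_cumulative_percent(9))  # 73
```

An 18-point percentile gap appears even though the scores carry no information at all.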

ACT Writing scores are not random (although it may sometimes seem that way), but the test’s reliability is well below that of other ACT subjects. Percentiles are not the silver bullet of score interpretation. In a display of misleading precision, ACT released cumulative percents tallied to the hundredths place for a test that will have only 11 score buckets and a standard error of measurement of 1. College Board, by contrast, has opted to provide no norms for its new essay scores.

What percentiles should students and colleges use and believe?

Another problem with percentiles is that they are dependent upon the underlying pool of testers. It is interesting to note that students performed better on the ACT essay than ACT originally estimated. That may seem like a good thing, but it means that the newly released percentiles are more challenging. A 22, for example, was reported as 80th percentile when the 1-36 scale was introduced in 2015. The newly released data, however, shows a 22 as the 68th percentile.

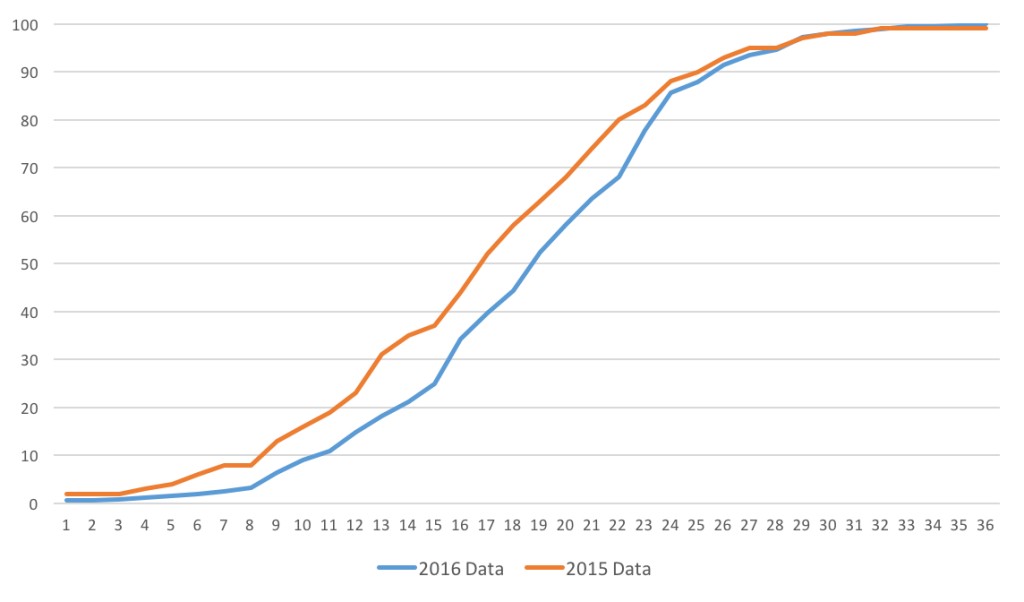

The chart below shows how graphs of the cumulative percentiles differ across the range. This difference is unrelated to the new scoring; it’s the difference between the numbers ACT had been touting from its pilot study and the actual results from the last year of testing. It’s clear on a number of counts that ACT misjudged student performance. They either set the mean below where it should have been (20-21 to match the other subjects), or they tried to set it in the right place and were waylaid by readers grading more harshly than expected. A third possibility is the most difficult to believe: they set the mean well below the other subject means, not realizing the confusion that would be caused. It’s unknown how the percentile figures may have evolved over time. Was it an advantage or a disadvantage to have more experienced readers by the February and April tests?

Students making judgments about scores would have had no way of knowing the actual score distribution. In fact, they would have received the incorrect percentile tables with their reports. Presumably the new tables will be used when presenting scores to colleges, although ACT has not yet clarified that point. The table below shows new and old percentile figures and the difference.

| ACT Writing Score (1-36 Scale) | Cumulative Percent (Stated Summer 2016) | Cumulative Percent (Stated Summer 2015) | Difference (2015 minus 2016) |
|---|---|---|---|
| 1 | 1 | 2 | 1 |
| 2 | 1 | 2 | 1 |
| 3 | 1 | 2 | 1 |
| 4 | 1 | 3 | 2 |
| 5 | 2 | 4 | 2 |
| 6 | 2 | 6 | 4 |
| 7 | 3 | 8 | 5 |
| 8 | 3 | 8 | 5 |
| 9 | 7 | 13 | 6 |
| 10 | 9 | 16 | 7 |
| 11 | 11 | 19 | 8 |
| 12 | 15 | 23 | 8 |
| 13 | 18 | 31 | 13 |
| 14 | 21 | 35 | 14 |
| 15 | 25 | 37 | 12 |
| 16 | 34 | 44 | 10 |
| 17 | 40 | 52 | 12 |
| 18 | 44 | 58 | 14 |
| 19 | 52 | 63 | 11 |
| 20 | 58 | 68 | 10 |
| 21 | 64 | 74 | 10 |
| 22 | 68 | 80 | 12 |
| 23 | 78 | 83 | 5 |
| 24 | 86 | 88 | 2 |
| 25 | 88 | 90 | 2 |
| 26 | 91 | 93 | 2 |
| 27 | 94 | 95 | 1 |
| 28 | 95 | 95 | 0 |
| 29 | 97 | 97 | 0 |
| 30 | 98 | 98 | 0 |
| 31 | 98 | 98 | 0 |
| 32 | 99 | 99 | 0 |
| 33 | 99 | 99 | 0 |
| 34 | 100 | 99 | -1 |
| 35 | 100 | 99 | -1 |
| 36 | 100 | 99 | -1 |

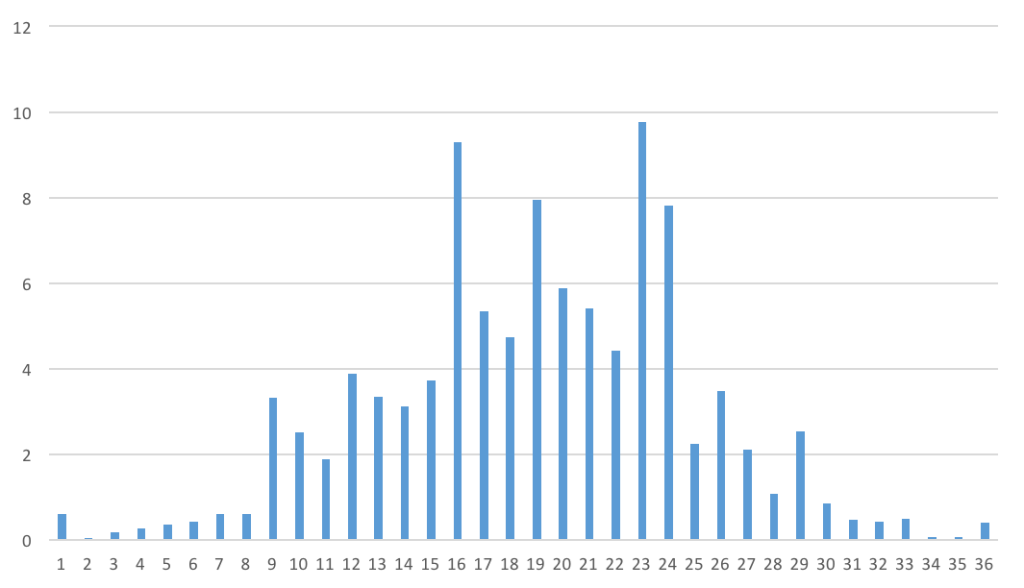

The visualization of the student numbers at each score shows how haphazard things are. Because of how the test is scored and scaled, certain scores dominate the results. Among the various form codes, almost 1 in 10 test takers ended up with 23 and another 8% at 24. These happen to be common scores when high Composite scoring students complain about “low” Writing scores. The low- to mid-20s is not that out of character for a 30+ student.

Will colleges use ACT’s concordance or calculate the average domain score? Will the results always be the same?

By trying to give a variety of ways of thinking about Writing scores, ACT seems to be confusing matters more in its “5 Ways to Compare 2015–2016 and 2016–2017 ACT Writing Scores” white paper. If there are so many ways to compare scores, which one is right? Which one will colleges use? Why don’t they all give the same result?

Most students are familiar with the concept that different raw scores on the English, Math, Reading, or Science tests can produce different scaled scores. The equating of forms can smooth out any differences in difficulty from test date to test date. When ACT introduced scaling to the Writing test, it opened up the same opportunity. In fact, we have seen that the same raw score (8-48) on one test can give a different result on another test. Not all prompts behave in the same way, just as not all multiple-choice items behave in the same way. This poses a problem, though, when things are reversed. Suddenly ACT is saying to “ignore all that scaling nonsense and just trust our readers.” Trusting the readers helped get ACT into this mess, and ignoring the scaling is hard to do when an estimated one million students have already provided scaled Writing scores to colleges.

Because of the peculiarities of scaling and concordances, the comparison methods that ACT suggests of calculating a new 2-12 score from an old score report versus using a concordance table can produce differing results.

On the April 2016 ACT, a student with reader scores of {4, 3, 4, 3} and {4, 4, 4, 3} would have a raw score of 29 and would have received a scaled score of 21. In order to compare that score to the “new” score range, we could simply take the rounded average of the domain scores and get 7 (29/4 = 7.25).

An alternative provided by ACT is to use the concordance table (see below). We could look up the 21 scaled score the student received and find that it concords to a score of 8.

Same student, same test, same reader scores, different result. Here is where percentiles can give false readings, again. The difference between a 7 and an 8 is the difference between 59th percentile and 84th percentile. That’s a distressing change for a student who already thought she knew exactly where she had scored.

It would seem as if directly calculating the new 2-12 average would be the superior route, but this neglects to account for the fact that some prompts are “easier” than others — the whole reason the April scaling was a little bit different than the scaling in September or December. There is no psychometrically perfect solution; reverting to a raw scale has certain trade-offs. We can’t unring the bell curve.

Below is the concordance that ACT provides to translate from 1-36 scaled scores to 2-12 average domain scores.

| Scaled 1-36 Score | Concorded 2-12 Score |
|---|---|
| 1 | 2 |
| 2 | 2 |
| 3 | 2 |
| 4 | 3 |
| 5 | 3 |
| 6 | 3 |
| 7 | 3 |
| 8 | 4 |
| 9 | 4 |
| 10 | 4 |
| 11 | 5 |
| 12 | 5 |
| 13 | 5 |
| 14 | 6 |
| 15 | 6 |
| 16 | 6 |
| 17 | 6 |
| 18 | 7 |
| 19 | 7 |
| 20 | 7 |
| 21 | 8 |
| 22 | 8 |
| 23 | 8 |
| 24 | 8 |
| 25 | 9 |
| 26 | 9 |
| 27 | 9 |
| 28 | 10 |
| 29 | 10 |
| 30 | 10 |
| 31 | 11 |
| 32 | 11 |
| 33 | 11 |
| 34 | 12 |
| 35 | 12 |
| 36 | 12 |
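The April 2016 example can be replayed against this table to show the two comparison methods disagreeing. The sketch below is our own illustration: the band list simply restates the concordance above, and the function names are hypothetical.

```python
from math import floor

# The concordance above, stored as (lowest scaled, highest scaled, 2-12 score).
CONCORDANCE_BANDS = [
    (1, 3, 2), (4, 7, 3), (8, 10, 4), (11, 13, 5), (14, 17, 6), (18, 20, 7),
    (21, 24, 8), (25, 27, 9), (28, 30, 10), (31, 33, 11), (34, 36, 12),
]

def concorded_score(scaled):
    """Look up the 2-12 score that a 1-36 scaled score concords to."""
    for low, high, concorded in CONCORDANCE_BANDS:
        if low <= scaled <= high:
            return concorded
    raise ValueError("scaled score must be between 1 and 36")

def direct_score(reader1, reader2):
    """Average domain score from the raw reader ratings, with .5 rounded up."""
    raw = sum(reader1) + sum(reader2)  # raw total on the 8-48 scale
    return floor(raw / 4 + 0.5)

direct = direct_score([4, 3, 4, 3], [4, 4, 4, 3])  # raw score of 29
via_table = concorded_score(21)                     # her April 2016 scaled score
print(direct, via_table)  # 7 8
```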

Will I still be able to superscore? What will colleges do?

Students with great Composite scores and weak essay scores have faced a re-testing dilemma. Many have hoped that more colleges would announce superscoring of Writing scores. Unfortunately, the scoring change does nothing to alleviate the dilemma. By making it even harder for colleges to have a uniform set of scores in their files, the new reporting decreases the likelihood that Writing scores from 2015-2016 will be superscored with those from 2016-2017. Would colleges superscore the rounded domain average? The concorded score? What ACT has effectively precluded them from doing is using the 1-36 scale as the benchmark for all scores. In the long run, the demise of the 1-36 essay score is a good thing. In the short run, it leaves the class of 2017 with even more headaches.

Will I still be able to get my test rescored?

ACT has not announced any changes to its rescoring policy. You can request a rescore of your essay within 3 months of your test date for a $50 fee. The fee is refunded if your score is changed. Scores will never be lowered due to a rescore.

ACT almost certainly took note of the increased requests it was receiving for rescores and the increased number of refunds it was issuing for changed scores. The shift to 2-12 scoring makes it somewhat less likely that a rescore will result in a change (fewer buckets). Students can work with their school counselor to obtain a copy of their essay and decide if a rescore is merited.

Is this even about college admission?

Not really, but ACT won’t admit it. Fewer than 15% of colleges will require ACT Writing from the class of 2017; most put little weight on it; and the nature of Writing scores means that the distinctions between applicants rarely have meaning.

The real target is the state and district consumer. The difference between a 7 and an 8 might not indicate much about an individual student, but if one large high school in your district averages 7.2 and another averages 7.8, the difference is significant. Domain scores may be able to tell state departments of education how their teachers and students are performing in different curricular areas. Increasingly, states and districts are paying for students to take the ACT (or SAT) in order to make all students “college ready” or to fulfill testing mandates. ACT and College Board view this as a growth opportunity that potentially extends across all of K-12.

The school and district consumers care more about converting scores because the longitudinal data matters. They want to be able to compare performance over time and need a common measuring stick.

The sudden introduction of the new scaling in September 2015 and the sudden reversal for September 2016 have undercut the credibility of a test that colleges had already viewed dubiously. For two classes in a row, admission offices have had to interpret two different sets of scores from the same students. They will be facing the third type of Writing score before they have had a chance to adjust to the second. We expect colleges to trust the ACT Composite and test scores that they have used for decades and to take a wait-and-see attitude toward essay scores.

Hello Art,

I’m a little confused about the ELA scoring on my son’s ACT. He received a 35 in English, a 36 in Reading, and a 10 in Writing, but the ELA shows a 34? Lastly, my son is looking at top computer science programs. His composite score was a 35, but his math was a 33 and science a 34. Will this hurt him for top schools, or should he retake the ACT to score higher? Thank you.

James,

The Writing score acts as a third score in the ELA mix. There is an implicit 1-36 scaled score. In this case, the 10 is a very good score, but it would be equivalent to about a 31. Average of 35, 36, and 31 is 34. Confused? You can safely ignore the ELA, since colleges will, too.

The second part is difficult to answer. Higher is better, but there is a diminishing return. If he is applying to STEM programs or mainly to colleges that superscore the ACT, I might suggest shooting for even higher scores. Otherwise, his 35 has already put him in the top quartile of admitted students at any college.

Hi Art,

My son got a composite score of 36 on the ACT. His essay score is 10. As an Asian applicant, does he need to retake the test for the essay score, since he wants to apply to Ivies that require essay scores? Please advise.

Thanks

Lattu,

His 36 is wonderful and even the top colleges have 25th-75th percentile range scores of 8-10 on the essay. It would not make sense for him to repeat the test.

If a student got an 11 on the essay but a 26 on the ACT and wants to re-test to get a better score, does the student need to retake the essay too? Or could he keep only the essay score?

Ej,

The University of California system will only consider scores taken from a single test date, and it requires the essay. I know of no other school that has this combination of conditions. You should be fine skipping the Writing section.